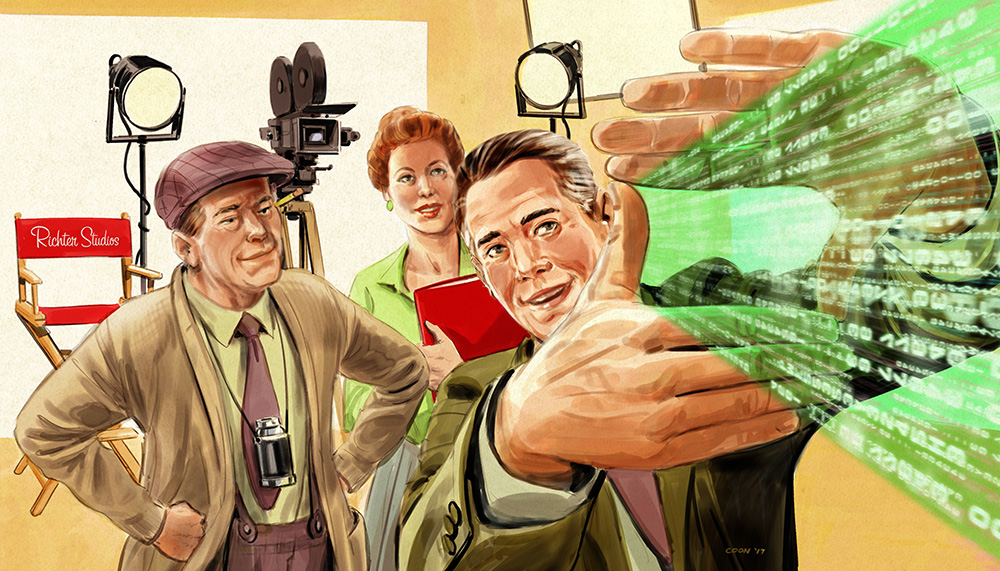

Coinciding with the 20th anniversary of Richter Studios, there is a great amount of innovation occurring in the film and video production industries. The breakthrough of computers and cameras becoming digital production partners over the past two decades has led to exponential growth. Without question, the way creative professionals “create” is evolving at a rapid pace. Now, the rise of Artificial Intelligence promises much more in the very near future.

If you are a creative in either the film or video production industries, this may well be the most important article you will read this year. The implications are enormous. In my estimation, artificial intelligence (AI) will have a profound impact on how you do your work over the next decade. Much more, in fact, than many presently realize.

Based on my research, I don’t believe that machines will replace humans, at least not in the near term, but they will become very active creative partners. So this isn’t an article intent on spreading any fear about job security. It’s an article to reveal how our creative processes will likely change. And to get right to the point, I mostly see a lot of good in what I’ve uncovered.

This article focuses on how AI will impact Editors, Composers, Scriptwriters, Cinematographers and Voice Talent. These specific areas were selected because they contained the best examples for an in-depth piece on the subject. I may write a future article about how AI will impact Actors (with the rise of Digital Actors) and, separately, about the field of Animation. However, I felt those areas were a bit thin on examples and need some more time to develop. For now, the five categories I have focused on have enough AI awesomeness to send your brain into hyperdrive.

Okay, first let’s kick this discussion off with the trailer below from IBM Research. It’s for the motion picture Morgan, a suspense/horror film released last fall by 20th Century Fox.

As illustrated in this video, not only does the movie itself explore the topic of artificial intelligence but so too does the method used to create its trailer. Described as a “cognitive movie trailer”, IBM Research used artificial intelligence, at least in part, to produce it.

In all, trailers from 100 horror movies were analyzed and segmented into a visual analysis, audio analysis and an analysis of each scene’s composition. Then IBM Research had its system review the full-length feature film Morgan and identify 10 moments that would be a good fit for a trailer. It identified a total of six minutes of footage that was provided to an IBM filmmaker, who produced the final edit. Quite an interesting glimpse into how AI could become a unique partner in the creative process.

However, before we usher in this unprecedented new era (or it ushers itself in…), we must first pose some very important questions:

- If AI was involved in the creation of a work of art, who legally owns it?

- How will AI impact the price of certain services in the production industry?

- Will “manual” creative services performed by humans enjoy an increased premium?

- What production careers may benefit from AI?

- Will AI stop with content creation or will creative review and strategy also come into play?

- Should the production industry seek to prevent competition from AI by supporting legislation that regulates it?

- What will AI not be able to improve or address in the production industry?

- What new creative opportunities will emerge as a result of AI?

These are just a small collection of questions to consider. One of the things I think about is this: if there’s a production accident (say a drone crash) that is the direct result of AI, then who is legally responsible for the damages? If the answer is the human or company that purchased the drone, then how can AI ever make a legal claim on a work of art? When it’s advantageous? And when it’s not advantageous, shift blame to a human (or company)? Another question to consider is when AI will begin to receive acknowledgements in major productions. I mean, when the credits roll, how is it listed? As an independent entity/machine or as creative property owned by a company?

Let’s dive deeper with more examples…

HOW WILL AI AFFECT VIDEO EDITING?

There are already some interesting AI Editors available in the market. Apple’s “Memories” movie feature (in their iOS “Photos” App) provides a great glimpse of where AI is taking editing. In very little time, you can create and select a photo album, add a music style, determine the video length (Short, Medium or Long) and – PRESTO – your video is created in just a few seconds. Here is a video I created of a recent vacation in Shanghai, China with my family. All in all, I was lucky to have spent five minutes on this. AI did the rest.

A more powerful AI editing solution I came across is called Magisto. As of this writing, the platform has over 40,000 business video customers. Magisto has a patent-pending AI technology called Emotion Sense. Similar to “Memories”, users select photos and videos to upload to their project. From there, you can provide “emotional direction” through your music choices and editing styles. What is really neat about the editing styles is that, unlike Apple’s “Memories”, Magisto has more advanced effects, transitions and tempos that speak to the “emotional mood” of your Movie.

The most impressive AI editing example I have to share involves a recent project where Richter Studios was asked to resurrect an interview-driven video we had originally produced nearly a decade ago. The client wanted to update the video with some newer, recently captured interview footage. The problem was, when we restored the project from archive, we discovered that we had all the original footage but the original Adobe Premiere project file needed to edit the piece was corrupted.

Not having the project file presented a real problem because recreating the video would have required us to review about a dozen hours of interview footage and locate each clip that was featured in the previous video. Practically speaking, it likely would have taken a week or longer to reconstruct the video shot-by-shot.

However, the team at Richter Studios turned to AI and devised a much faster editing solution. Enter Soundbite, from BorisFX. This amazing piece of software analyzes dialogue in a given batch of videos and then enables you to quickly search the dialogue to locate specific footage. “With Soundbite, I was able to search the interview footage and find specific words in just a matter of seconds,” explained Patrick Cheng, an Animator/Editor at Richter Studios. “The search results even included the filename and timecode for each occurrence of a word. The software is so advanced that it can find words across a variety of different pronunciations or accents and regardless of background noise. There wasn’t a single piece of footage I couldn’t find using this software.”

HOW WILL AI AFFECT COMPOSERS?

Music composition is definitely one of the areas where AI has made serious inroads. In fact, be prepared to be blown away by what I’m about to share… it’s that good.

Meet Aiva, who is dubbed as AI “capable of composing emotional music.” According to the website that promotes “her” music, “Aiva has been learning the art of music composition by reading through a large collection of music partitions, written by the greatest Composers (Mozart, Beethoven, Bach, …) to create a mathematical model representation of what music is. This model is then used by Aiva to write completely unique music.”

Aiva’s mission statement is fascinating: To become one of the greatest composers in history. That’s a pretty bold ambition but let’s give one of her songs a listen to hear just how far AI has come in the field of music:

Now, grab your favorite cup of coffee and be prepared to pick your jaw up off the floor. To further blow your mind into the next decade, here’s her first album called Genesis:

It gets better (or worse, depending on your fears about AI). With the incredible accomplishment of Genesis and many single tracks that followed, Aiva was registered under the France and Luxembourg authors’ rights society (SACEM) and became the first AI to officially receive the worldwide status of Composer. Amazingly, all of Aiva’s works feature a copyright crediting her.

Perhaps slightly less stunning but still a serious sign of the tsunami headed toward the production industry is Amper, a new startup that recently raised $4 million in funding and also offers music created by AI. Amper’s focus is to empower users to “instantly create and customize original music for your content.”

Think about it for a second. One of the downsides to any royalty-free stock offering is that anyone else can license it too. That means your video might be one of thousands that feature the exact same soundtrack. In speaking to this, I’ve watched dozens upon dozens of television commercials or YouTube pre-rolls that made me cringe. And to think that I was certain I was the only person using that particular song….

Unlike Aiva, whose music is in line with a major symphony (perfectly suited for use in feature films), Amper has a much broader offering that speaks to corporate videos, commercials and shorter video content. Here’s a sample:

Although the software is still a beta version, Amper goes nuclear when you realize that it plans to integrate with Adobe Premiere Pro. This makes it very easy to create an original music track, that is also royalty-free, during the editing process. Per their website, Amper claims that “Your music is uniquely crafted with no risk of it being used by someone else.” Even better, the site also states that “Amper provides you with a global, perpetual-use, and royalty-free license, with no conditional or unexpected financial expenses.”

Amper’s process is simple and ultimately boils down to three main creative considerations:

– Select a mood, style or length

– Customize with easy-to-use editing functionality

– Click “Render”

Once you’ve done this, your original composition is available in seconds and broadcast-ready. Very intriguing.

HOW WILL AI AFFECT SCRIPTWRITING?

Although less mature than other applications of AI, there have been some noteworthy developments related to scriptwriting and artificial intelligence. Last summer (July 2016), news broke that AI had written the “perfect horror script” for a film called Impossible Things. Using an AI software tool, audience response data was analyzed to help develop plot points that were in line with viewer demand.

To be fair, the script was written through a collaboration of AI and humans. The structure for the story, including its premise and specific plot twists, was determined using AI (which, by the way, resulted in the recommendation that the film feature both ghosts and family relationships). The writing baton was then handed over to human writers to come up with the details that spoke to this structure.

Interestingly, the AI software tool also determined that the trailer for the film needed a piano and bathtub scene to better resonate with the target audience. The results of this trailer can be seen in the video below, which was part of a successful Kickstarter campaign to fund the production of the film.

Around the same time that Impossible Things was making waves, a separate, unrelated short film called Sunspring debuted. Although many found its results to be humorous, the fact remains that the film was entirely written by AI using neural networks (which, put simply, are computer systems modeled on the human brain and nervous system that can learn from observational data). The AI bot which wrote Sunspring became known as Benjamin (referred to by some as the “automatic scriptwriter”).

To create Sunspring, Benjamin was provided with the scripts of dozens of science fiction movies (among them were Interstellar, Ghostbusters and The Fifth Element). The screenplay (which included actor directions) was created in response to the Sci-Fi London film festival 48-hour challenge. The result was a quirky, meandering and nonsensical piece (the Sharknado folks would even be impressed). Even so, it’s utterly fascinating to watch (admittedly, this is due in large part to the execution by the director and cast):

What’s even more fascinating, however, is the sequel effort Benjamin recently came up with that features David Hasselhoff. In this short film entitled It’s No Game, the story is actually about Hollywood scriptwriters, whereby we are taken to an alternate reality where – during yet another writers’ strike – AI has begun to replace humans.

For this effort, instead of having Benjamin analyze entire raw scripts, it was fed dialogue from classic movies and television shows like Knight Rider and Baywatch. This was done to avoid the unnatural stage direction and other oddities seen in Sunspring. The result is a much more focused and grounded film. Let the real writers out there take note.

HOW WILL AI AFFECT CINEMATOGRAPHERS?

One major area where AI is already affecting cinematographers is drone aerials. A great example of just how far AI has come in this field is the sci-fi film In The Robot Skies, which was shot exclusively using autonomous drones. Imagined as a film where drones were perceived as a “cultural object”, the footage was captured by an army of them, each with its own cinematic rules and behaviors. Very little human interaction was required. The trailer for the film is as fascinating as it is haunting.

But it gets even better. New developments in AI technology are making it much easier to maintain framing (camera angle and distance) while filming movie sequences from the skies. Those in the know have already seen drones which can follow a person as they jog, climb or go biking but if you turn 180 degrees away from the camera, it will film your back.

Enter a team of researchers from MIT and ETH Zurich, who have made advances in AI that enable drones to keep the camera focused on your face, regardless of whether you turn away from it (meaning the drone will reposition itself automatically). This impressive drone-specific camera technology allows a director to determine a shot’s framing (for instance: a profile shot, straight on, over the shoulder, etc.). Incredibly, these framing directions are preserved by the drone when the actors move around. Also, assuming the information the drone has about its environment is accurate, the researchers claim there will be no collisions with either stationary or moving obstacles. The video below provides a fascinating glimpse into this groundbreaking technology.

Another impressive AI drone technology is one from Zero Zero Robotics called Hover Camera. I like this particular drone because of the number of requests I’ve had from clients over the past year asking if it was possible to film inside their office with a drone. The answer has always been yes but, due to the exposed propellers of drones, safety issues always come up.

However, with Hover, the propellers are safely tucked away in a slick casing. Dubbed “Your Self-Flying Personal Photographer”, it’s an impressive piece of hardware. It too uses face detection technology and can follow you on an autonomous flight. What’s even cooler, because of its small weight (basically half a pound), size and design, you can easily take it with you anywhere. According to Zero Zero Robotics, you can even safely grab it mid-air. Want an even cooler feature? Using a simple hand gesture (like a “Peace” or “Okay” sign) the drone will take a photo of you and your friends.

HOW WILL AI AFFECT VOICE OVER TALENT?

We book a good deal of voice over talent at Richter Studios, so I was naturally curious to see how far certain text-to-speech offerings had come along. I have long dreamed of the day when AI-generated voice content could be created and translated to other languages from directly within an editing program. The time saved would be absolutely tremendous. While it doesn’t appear we’re fully there yet, it can’t be all that far away either.

The best example I came across was NaturalReader, which is an online-based speech generator. I’ve tinkered around with their web-based application and it was impressive. Not simply a monotone text reader, the NaturalReader application utilizes AI technology to identify syntactic environments. Long story short, their application can determine “when and where to apply the correct pronunciation for various heteronyms – words that are spelt the same but pronounced differently.”

What I really like about the NaturalReader application is that it puts you in the driver’s seat. You simply add your text to their interface and choose a few parameters like voice region (e.g., British English), Gender (they even have specific male and female names unique to that voice region; for example “Francesco” for male Italian) and Speed (you can choose from -5 to +5). Then hit play to hear how you like it. They have advanced controls as well, where you can insert breaks between words or sentences, and adjust the volume, pitch or emphasis for a particular word or group of words (though the natural pauses after a comma are fantastic). The video below provides a nice summary of how it all works.

As impressive as the technology is, I personally don’t believe that AI will replace human voice talent overnight. The sound of the machine with the NaturalReader application is still detectable in this version and the user experience doesn’t quite measure up to Steve Jobs’s famous “magic” litmus test requirement – where technology works so well it literally feels like magic. That being said, if they keep improving the user experience and further broaden their roster of creative “talent”, there’s no question the machine will replace traditional voice talent. And to a large extent, it already has, with over 10 million customers using NaturalReader products.

THE NEXT DECADE

During this exploratory journey, I came across many research articles that showed where AI was headed for production. For the most part, humans and our jobs appear to be safe. The machine will likely not replace us, but instead, help us get things done faster and provide more creative options to consider. Two areas where I felt AI could actually present a real alternative to humans in the next decade were music composition and voice talent. Based on the examples I’ve shared above — where you can already have very impressive music scores and voice recordings created entirely by AI — another decade could entirely reshape these two types of creative services.

There are also several technologies on the horizon where images will be created through AI with little to no assistance from humans. Google Deep Dream shows great promise, as do other developments where AI is beginning to create video footage from scratch. No cameras required.

Many moons ago, I trademarked the term “Pioneers In The Golden Age of Digital Cinema®”. It has been my company’s slogan for several years now. In the early days of Richter Studios, I held a belief that my generation of creative talent would not only be the first to embrace the digital age of production, but likely also the last to have bona fide, hands-on experience with traditional filmmaking and video production techniques. This meant that scriptwriting, editing and animation would be handled by living, breathing humans; music creation by a real composer; that physical film and video tapes would have to be digitized, and that master files would have to be output to tape decks. Yes, that was the world I and many others came from.

Real production done by real people. Ultimately though, the slogan is also a nod to what I was certain would eventually lead to a final curtain call for traditional, hands-on production. I knew there would always be a need for great content creation but the creative process would radically change. That’s the real conclusion I’ve reached — that the need for wonderful stories will always be around but the machine is dramatically changing how storytellers will create them.

Having said this, if filmmaking and video had never become digital, many of us would never have been able to enter these industries in the first place. The democratization of these mediums is why they have grown so incredibly over the past two decades. In my view, AI will push production forward, not backward. The threat that AI poses is very real, but so too is its creative potential. Ultimately, it’s up to humans to make it as good as it can be and leverage it to advance the craft we all love.